As the version numbers of software and services have crept into the public consciousness, the influence of marketing has moved into the numbering process. When version identifiers no longer communicate anything besides the passage of time or marketing campaigns, they are just vanity numbers.

Identifying versions of software and services can be a tricky business, with increasingly long strings of digits, letters, and punctuation. Consider a version such as “1.15.5.6ubuntu4”. Ubuntu or Debian package maintainers may feel right at home, but even software engineers like myself can get lost after the first or second dot.

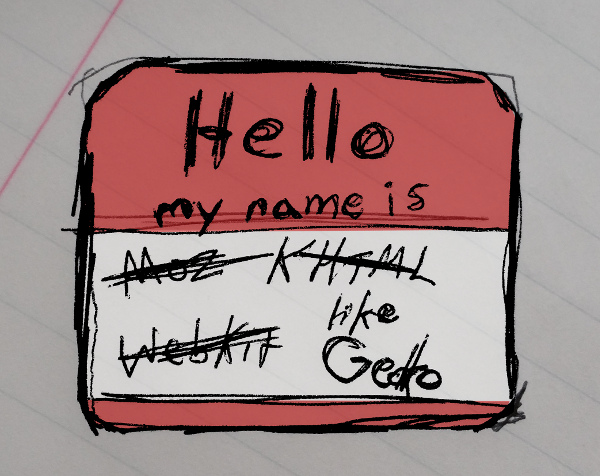

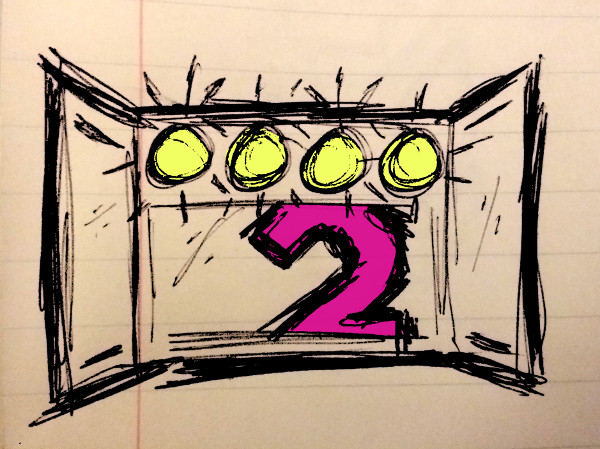

Software versions often begin innocently enough, with “1” being the first official version and the decimal digits afterward indicating incremental change. Changes to the most significant digit were often significant, noteworthy changes in the software’s behavior, capability, and/or compatibility. Sadly, I fear the marketing hype that accompanied the Web 2.0 movement and Google’s Chrome browser has increased the popularity of vanity version numbering.

Sequels to movies and games are common, and when you see a number next to the title it provides instant context. You know that there may be some back-story, content, or previous experience awaiting as you encounter the 3rd or 4th release of an unfamiliar franchise. While numbers have fallen out of fashion in film and games, replaced with secondary titles, they served their role well enough. And releases within a franchise like Iron Man are more individual products than versions of a single application like Internet Explorer.

Regardless, a user seeing “Opera version 15” might not realize that upgrading from 12 means more than just the usual “better than before”. Version 15 saw Opera radically change how it displays pages, how it handles e-mail, and which add-on capabilities are available. This release clearly introduced breaking changes, something I consider the most important thing versions should communicate. Yet the pattern before version 15 led users to believe the first number was not so significant.

Version numbers, or technically identifiers since they’re not always strictly numeric, can communicate a lot of different things:

- Compatibility and incompatibility

- Addition or loss of capabilities

- Tweaks or fixes

- Package information

- Passage of time

- Progress toward the first release (like 0.9 for 90%)

- Revisions internal to the project

- Start of a new marketing campaign

I’d argue that compatibility, capabilities, and fixes are the most important, prioritized in that order, which is why I think the Semantic Versioning concept is necessary. Despite being designed for Application Programming Interfaces (APIs), the behind-the-scenes components that make the ‘cloud’ and software tick, it is sorely needed in user-facing products like Internet browsers too.

Semantic Versioning’s goal of clearly communicating to machines and programmers can also help users understand potential consequences once they know the pattern. And it can be done easily, succinctly, and before they actually choose to update or install.
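To make that pattern concrete, here is a minimal sketch of how a tool (or a careful reader) might interpret an upgrade between two Semantic Versioning identifiers of the form MAJOR.MINOR.PATCH. The function names and the wording of the messages are my own for illustration, and the sketch assumes plain three-part numeric versions with no pre-release or build suffixes.

```python
from typing import Tuple


def parse_version(text: str) -> Tuple[int, int, int]:
    """Parse a plain 'MAJOR.MINOR.PATCH' string into three integers.

    Assumption: no pre-release or build metadata (e.g. '-beta' or '+build5').
    """
    major, minor, patch = (int(part) for part in text.split("."))
    return major, minor, patch


def describe_upgrade(current: str, candidate: str) -> str:
    """Summarize what moving from `current` to `candidate` should mean."""
    old = parse_version(current)
    new = parse_version(candidate)
    if new <= old:
        return "not an upgrade"
    if new[0] != old[0]:
        # A new major number signals incompatible changes.
        return "breaking changes possible"
    if new[1] != old[1]:
        # A new minor number signals added, backward-compatible capabilities.
        return "new capabilities, backward compatible"
    # Only the patch number changed.
    return "fixes only"


if __name__ == "__main__":
    print(describe_upgrade("1.4.2", "2.0.0"))
    print(describe_upgrade("1.4.2", "1.5.0"))
    print(describe_upgrade("1.4.2", "1.4.3"))
```

The three outcomes mirror the priority order argued for above: compatibility first, capabilities second, fixes third.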

Ironically, it’s the APIs, which users do not see, that tend to be the most semantic or consistent, at least in their end-point URIs. These often contain the major version number clearly embedded, like “v1” in “api.example.com/v1/”. My experience developing APIs has been that only the major version should be embedded in the URI, but minor and patch fragments can be useful for informational purposes.
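As a rough illustration of the “v1” convention, the sketch below pulls the major version out of an end-point URI. The function name is hypothetical, and it assumes the version appears as its own path segment in the form “v” followed by digits; real APIs may use other layouts.

```python
import re
from typing import Optional
from urllib.parse import urlsplit


def major_version_from_uri(uri: str) -> Optional[int]:
    """Return the major version embedded in a URI path, or None if absent.

    Assumption: the version is a whole path segment such as 'v1' or 'v12'.
    """
    path = urlsplit(uri).path
    for segment in path.strip("/").split("/"):
        match = re.fullmatch(r"v(\d+)", segment)
        if match:
            return int(match.group(1))
    return None


if __name__ == "__main__":
    print(major_version_from_uri("https://api.example.com/v1/"))
    print(major_version_from_uri("https://api.example.com/users/"))
```

Because only the major number lives in the URI, a client can keep calling the same address across minor and patch releases and only needs to change it when compatibility breaks.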

One interesting hybrid scheme is Java. Its major and minor version numbers are semantic, with major technically remaining at ‘1’. And at least up until version 8, the latest as of this writing, it has remained largely backward compatible with the first official release. The minor version increases as capabilities are added: 1.1, 1.2, 1.3 … 1.8. Yet since 1.5 it has been marketed using only the minor number. Articles referring to “Java 7” or “Java 8” are technically referring to 1.7 or 1.8 respectively. Sadly, the patch (a.k.a. update) version for Oracle’s official releases has gotten complicated.
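The relationship between the technical and marketed numbers can be sketched in a few lines. The function name is made up for illustration, and the mapping is only meant to cover releases like the 1.7 and 1.8 mentioned above; it deliberately ignores the patch (update) portion.

```python
def marketed_java_name(developer_version: str) -> str:
    """Map a technical version such as '1.8' to its marketed name, 'Java 8'.

    Assumption: the identifier starts with '1.' followed by the minor number;
    anything after the minor number (such as an update level) is ignored.
    """
    major, minor = developer_version.split(".")[:2]
    if major != "1":
        raise ValueError("expected a version of the form 1.N")
    return f"Java {int(minor)}"


if __name__ == "__main__":
    print(marketed_java_name("1.7"))
    print(marketed_java_name("1.8"))
```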

If you are one of those privileged individuals choosing a version number, please don’t get caught up in the hype. Let the needs of your users determine what is appropriate. And when I have the opportunity, I’ll try to do the same.

Have you chosen a version identifier? Do you have some thoughts to share? If so please consider commenting.